The Genesis of Image & Video Processing AI

What if you place AI where it belongs - at the very beginning of the imaging process?

When people think of AI and cameras, they almost always think of post-processing.

For over a decade, we at SUB2r have been working on Neural network Image Process (NnIP AI).

NnIP AI significantly improves image quality while drastically reducing the computational power needed to process the data.

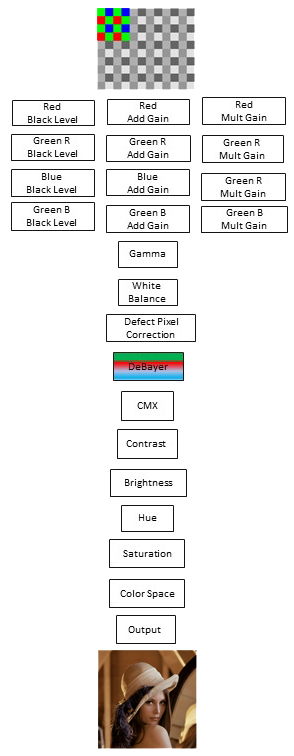

Traditional Pipeline

The imaging pipeline used in almost all cameras today is cumbersome and computationally expensive: data is mathematically processed, packaged, handed off to the next step, unpacked, processed again, and so on down the line.

Refinements have been made, but the fundamental process has remained the same since 1975.
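The hand-off pattern described above can be sketched in a few lines of Python. This is a minimal illustration only, not SUB2r's or any vendor's actual pipeline: the stage names, the 10-bit value range, the black level of 64, and the gamma of 2.2 are all assumptions chosen for the example.

```python
from typing import Callable, List

Frame = List[float]  # one row of raw sensor values; 10-bit range (0..1023) is assumed

BLACK_LEVEL = 64.0   # hypothetical sensor black level
WHITE_LEVEL = 1023.0 # hypothetical maximum raw value


def subtract_black_level(frame: Frame) -> Frame:
    # Stage 1: remove the sensor's dark offset, clamping at zero.
    return [max(v - BLACK_LEVEL, 0.0) for v in frame]


def normalize(frame: Frame) -> Frame:
    # Stage 2: scale to the 0..1 range expected by later stages.
    span = WHITE_LEVEL - BLACK_LEVEL
    return [min(v / span, 1.0) for v in frame]


def gamma_encode(frame: Frame, gamma: float = 2.2) -> Frame:
    # Stage 3: apply a display gamma curve.
    return [v ** (1.0 / gamma) for v in frame]


STAGES: List[Callable[[Frame], Frame]] = [subtract_black_level, normalize, gamma_encode]


def run_pipeline(raw: Frame) -> Frame:
    # Each stage finishes completely and hands its full output to the next one.
    frame = raw
    for stage in STAGES:
        frame = stage(frame)
    return frame
```

Every stage here makes its own full pass over the data and produces an intermediate copy, which is the cost the traditional design pays at each hand-off.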

Power of NnIP

A neural network combines these linear algorithms into a single process.

It requires a fraction of the computational power of a linear system.

The advantages of NnIP:

- Overall video quality improvement (compound algorithm)

- Background removal on camera (introduce static and dynamic background via secondary inputs)

- Low light video reconstruction/enhancement

- Reduced processing power

- Reduced component cost

The Magic

Capturing the genesis of a pixel requires a camera engineered at the circuit level to expose this data; it cannot be added after a camera is built.

SUB2r has the only camera designed with the capability to access this information.

NnIP requires three things:

- Knowing the value of a pixel the moment the electric charge (analog) on the camera chip is converted to a numeric value (digital)

- Knowing the value of that exact same pixel at various steps along the imaging pipeline

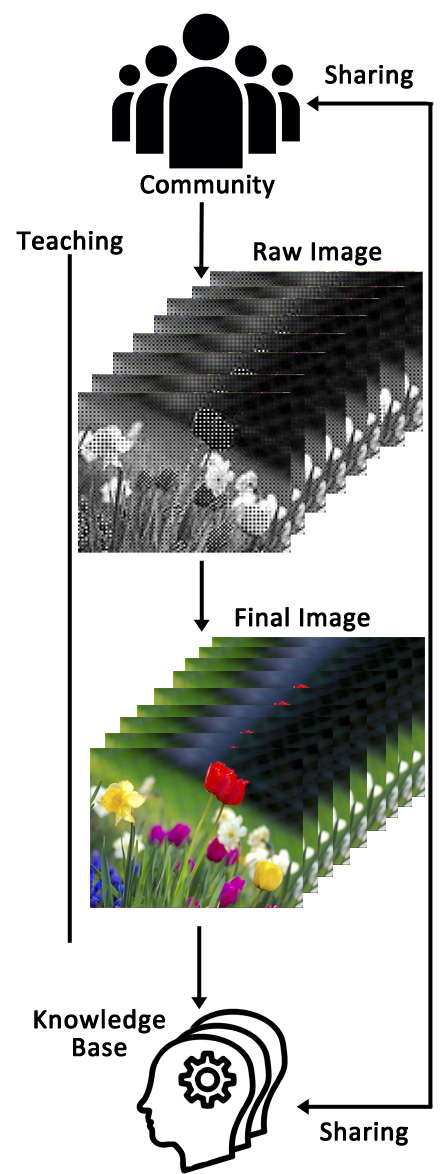

- Having millions of matched pair frames of data to teach the NnIP

Next Gen Image Pipeline

To capture the next state of the pixel, the firmware has to be coded with hooks that allow pixel-level data to be extracted.

The pipeline has to be designed to capture matched pairs and format those pairs into data sets that can be transferred to a centralized knowledge base.
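As a rough sketch of what capturing a matched pair could look like in software, the Python below records the raw value of each pixel together with the value of the same pixel after every pipeline stage. The `MatchedPair` type, the function names, and the two example stages are hypothetical and exist only for illustration; the actual capture happens in camera firmware and circuitry, and its data format is not described here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Frame = List[float]
Stage = Tuple[str, Callable[[Frame], Frame]]


@dataclass
class MatchedPair:
    # Pixel values immediately after analog-to-digital conversion.
    raw: Frame
    # The same pixels after each named pipeline stage.
    taps: Dict[str, Frame] = field(default_factory=dict)


def capture_matched_pair(raw: Frame, stages: List[Stage]) -> MatchedPair:
    # Run the stages in order and keep a copy of the frame after each one.
    pair = MatchedPair(raw=list(raw))
    frame = list(raw)
    for name, stage in stages:
        frame = stage(frame)
        pair.taps[name] = list(frame)
    return pair


def to_training_example(pair: MatchedPair, target_stage: str) -> Tuple[Frame, Frame]:
    # Format one pair as (input, target) for a supervised training set.
    return pair.raw, pair.taps[target_stage]


# Hypothetical stages, used only to exercise the sketch.
EXAMPLE_STAGES: List[Stage] = [
    ("black_level", lambda f: [max(v - 64.0, 0.0) for v in f]),
    ("gain", lambda f: [v * 2.0 for v in f]),
]
```

Calling `capture_matched_pair(raw, EXAMPLE_STAGES)` for each frame and collecting the results of `to_training_example` gives a list of input and target frames that could be exported as a data set.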

SUB2r cameras have been engineered at the lowest circuit level and coded at the logic gate level to collect the information needed to generate the millions of data sets required to train the NnIP.

AI Beyond The Camera - Into The Studio

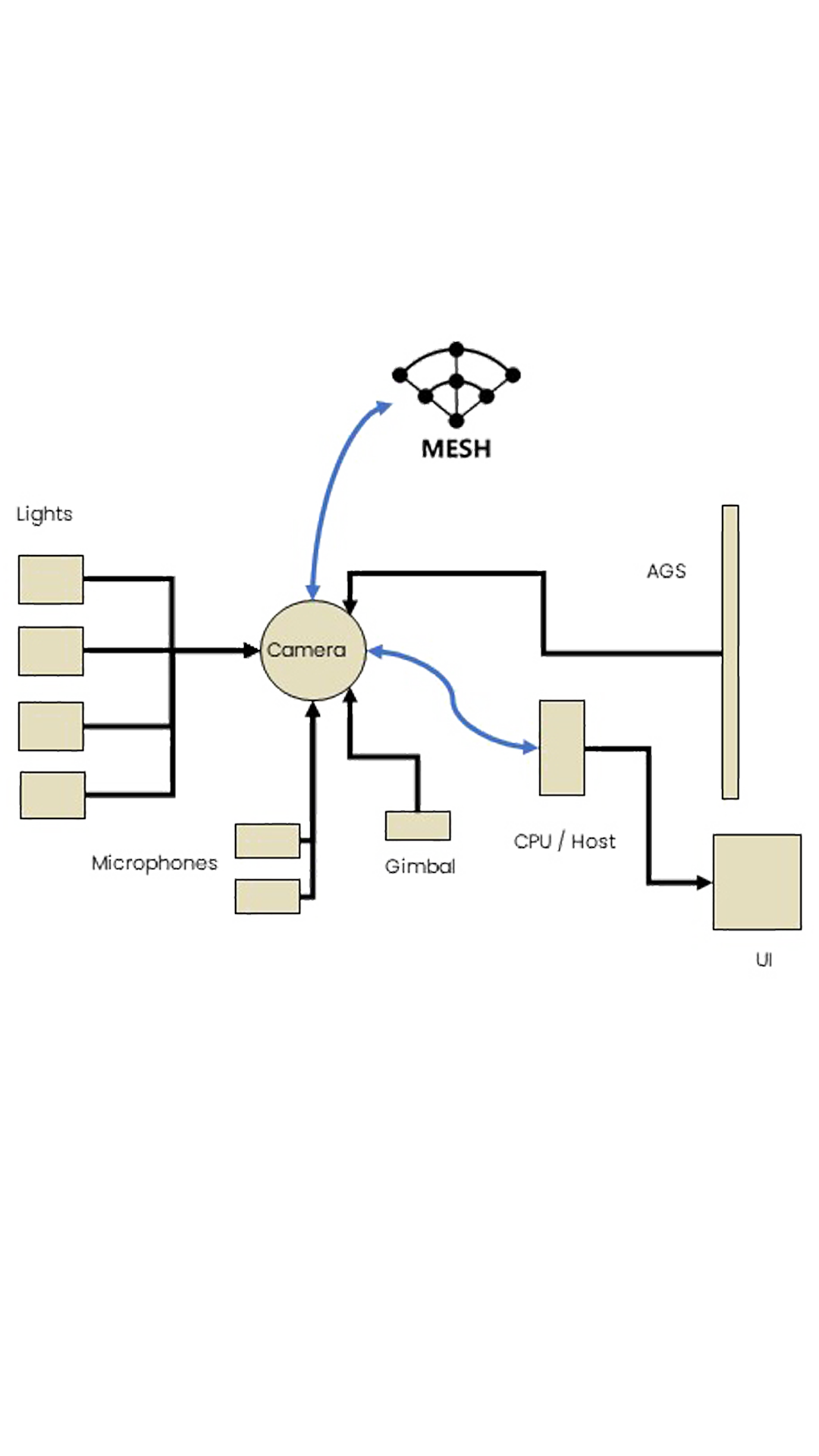

SUB2r cameras can connect to the outside world and control external devices such as lights, our AGS, gimbals, and other I2C-enabled devices.

Instead of the camera having to adapt to the environment, the camera can adapt the environment to the camera to produce the best quality video image possible.
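The idea of adapting the environment to the camera can be illustrated with a simple feedback loop. The sketch below is not the NnIP and does not talk to real hardware: `SimulatedLight`, `measure_luminance`, and the ambient and gain constants are hypothetical stand-ins for an I2C-controlled light and the camera's own brightness measurement, and a plain proportional controller is swapped in for whatever decision logic the camera actually uses.

```python
from dataclasses import dataclass


@dataclass
class SimulatedLight:
    # Stand-in for an externally controlled light; level runs 0.0 (off) to 1.0 (full).
    level: float = 0.0

    def set_level(self, level: float) -> None:
        # Clamp to the valid range, as a real dimmer would.
        self.level = min(max(level, 0.0), 1.0)


def measure_luminance(light: SimulatedLight, ambient: float = 0.1,
                      light_gain: float = 0.8) -> float:
    # Toy scene model: the camera sees ambient light plus the light's contribution.
    return min(ambient + light_gain * light.level, 1.0)


def adjust_light(light: SimulatedLight, target: float = 0.5, step_gain: float = 0.5,
                 tolerance: float = 0.01, max_steps: int = 50) -> float:
    # Nudge the light toward the target luminance instead of changing camera settings.
    for _ in range(max_steps):
        error = target - measure_luminance(light)
        if abs(error) <= tolerance:
            break
        light.set_level(light.level + step_gain * error)
    return measure_luminance(light)
```

Running `adjust_light(SimulatedLight())` drives the simulated scene toward the target brightness while the camera's own exposure settings stay untouched.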

Beyond the studio, the camera can connect to multiple systems across a mesh network. The AI can extend to controlling multiple nodes within the mesh, with each node being a camera and its supporting external devices.